Writing middleware in Go seems pretty easy at first, but there are several easy ways to trip up. Let's walk through some examples.

Reading the Request

All of the middlewares in our examples will accept an http.Handler as an argument, and return an http.Handler. This makes it easy to chain middlewares.

The basic pattern for all of our middlewares will be something like this:

    func X(h http.Handler) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            // Something here...
            h.ServeHTTP(w, r)
        })
    }

Let's say we want to redirect all requests with a trailing slash - say, /messages/, to their non-trailing-slash equivalent, say /messages. We could write something like this:

    func TrailingSlashRedirect(h http.Handler) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            if r.URL.Path != "/" && r.URL.Path[len(r.URL.Path)-1] == '/' {
                http.Redirect(w, r, r.URL.Path[:len(r.URL.Path)-1], http.StatusMovedPermanently)
                return
            }
            h.ServeHTTP(w, r)
        })
    }

Easy enough.

Modifying the Request

Let's say we want to add a header to the request, or otherwise modify it. The docs for an http.Handler say:

Except for reading the body, handlers should not modify the provided Request.

The Go standard library copies http.Request objects before passing them down the handler chain, and we should as well. Let's say we want to set an X-Request-Id header on every request, for internal tracing. Create a shallow copy of the *Request and set the header on the copy before passing it to the next handler.

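    func RequestID(h http.Handler) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            r2 := new(http.Request)
            *r2 = *r
            // The shallow copy still shares its Header map with r, so give the
            // copy its own map before modifying it (Header.Clone needs Go 1.13+).
            r2.Header = r.Header.Clone()
            r2.Header.Set("X-Request-Id", uuid.NewV4().String())
            h.ServeHTTP(w, r2)
        })
    }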

Writing Response Headers

If you want to set response headers, you can just write them and then proxy the request.

    func Server(h http.Handler, servername string) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            w.Header().Set("Server", servername)
            h.ServeHTTP(w, r)
        })
    }

Last Write Wins

The problem with the above is that if the inner Handler also sets the Server header, it overwrites yours. This can be problematic if you do not want to expose the Server header of internal software, or if you want to strip headers before sending a response to the client.

To do this, we have to implement the ResponseWriter interface ourselves. Most of the time we'll just proxy through to the underlying ResponseWriter, but if the user tries to write a response we'll sneak in and add our header.

    type serverWriter struct {
        w           http.ResponseWriter
        name        string
        wroteHeader bool
    }

    func (s *serverWriter) Header() http.Header {
        return s.w.Header()
    }

    func (s *serverWriter) WriteHeader(code int) {
        if !s.wroteHeader {
            s.w.Header().Set("Server", s.name)
            s.wroteHeader = true
        }
        s.w.WriteHeader(code)
    }

    func (s *serverWriter) Write(b []byte) (int, error) {
        if !s.wroteHeader {
            // We hit this case if the user never calls WriteHeader (default 200).
            s.w.Header().Set("Server", s.name)
            s.wroteHeader = true
        }
        return s.w.Write(b)
    }

To use this in our middleware, we'd write:

    func Server(h http.Handler, servername string) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            sw := &serverWriter{
                w:    w,
                name: servername,
            }
            h.ServeHTTP(sw, r)
        })
    }

What if the user never calls Write or WriteHeader?

If the user doesn't call Write or WriteHeader - for example, a 200 with an empty body, or a response to an OPTIONS request - neither of our intercept functions will run. So we should add one more check after the ServeHTTP call for that case.

    func Server(h http.Handler, servername string) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            sw := &serverWriter{
                w:    w,
                name: servername,
            }
            h.ServeHTTP(sw, r)
            if !sw.wroteHeader {
                sw.w.Header().Set("Server", sw.name)
                sw.wroteHeader = true
            }
        })
    }

Other ResponseWriter interfaces

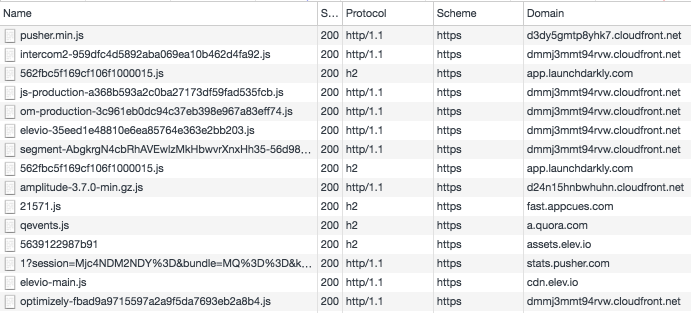

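The ResponseWriter interface is only required to have three methods. But in practice, the value behind it often implements other interfaces as well, for example http.Pusher or http.Flusher. A wrapper that implements only the three required methods hides those from the inner handler, so your middleware might accidentally disable features such as HTTP/2 server push, which would not be good.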

    // Push implements the http.Pusher interface.
    func (s *serverWriter) Push(target string, opts *http.PushOptions) error {
        if pusher, ok := s.w.(http.Pusher); ok {
            return pusher.Push(target, opts)
        }
        return http.ErrNotSupported
    }

    // Flush implements the http.Flusher interface.
    func (s *serverWriter) Flush() {
        f, ok := s.w.(http.Flusher)
        if ok {
            f.Flush()
        }
    }

That's it

Best of luck! What middlewares have you written, and how did it go?
